
“Depending on who you speak to, AI could either lead to the destruction of civilisation, or the cure for cancer or both. It could either displace today’s jobs or enable an explosion in future productivity. The truth probably embraces both scenarios.

At the FCA we are determined that, with the right guardrails in place, AI can offer opportunity.”

Source: Nikhil Rathi, FCA CEO, Speech to The Economist on 12 July 2023

What does this mean for financial services?

More financial services hubs are seeking to support and encourage innovation and competition, which are key drivers for the sector. In the UK, we see these messages echoed in the regulatory business plans and strategy. But what risks and challenges does AI pose to financial services? Should we be concerned, and how can we leverage AI? These were some of the topics explored by the AIPPF.

The AIPPF (Artificial Intelligence Public-Private Forum) was established by the FCA and the Bank of England (BoE) to encourage discussion and explore the use of artificial intelligence and machine learning. It had the following objectives:

- Share information about safely adopting AI, the possible challenges and the barriers to adopting AI

- Gather views on the best way to manage AI, such as principles, guidance or regulation

- Consider whether guidance and regulation are needed

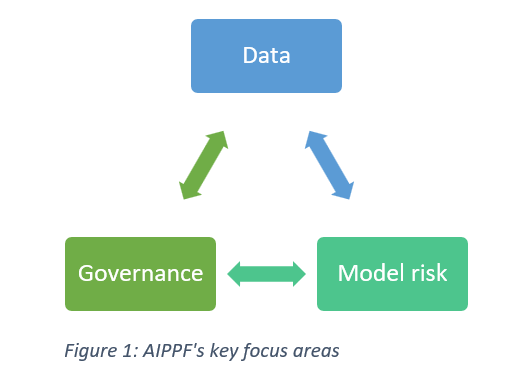

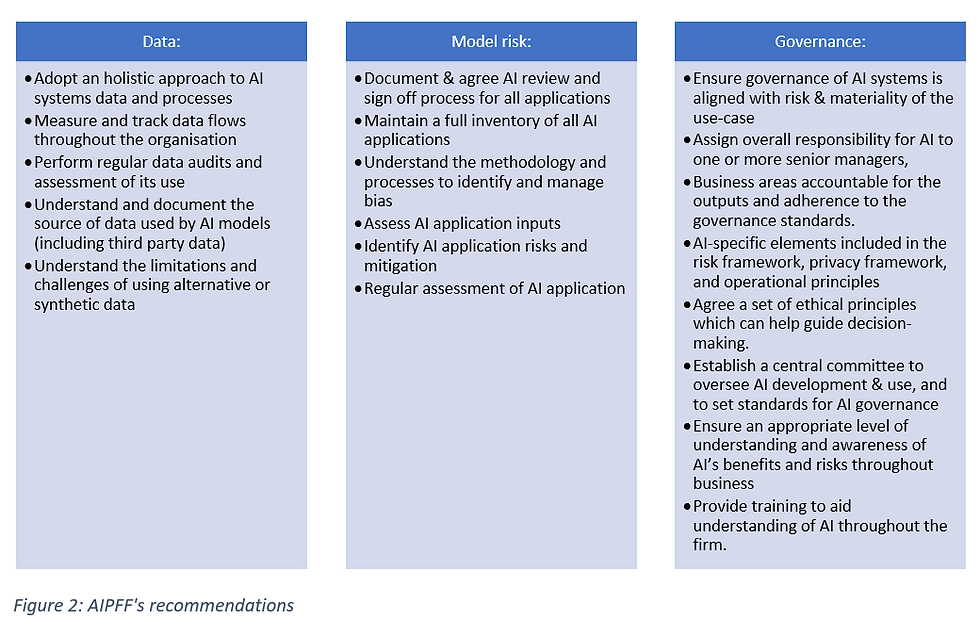

The AIPPF last met in October 2022 and issued its final report in February 2023. The report highlighted three specific areas for action for financial services: Data, Model Risk and Governance.

Data:

AI brings the benefit of processing huge amounts of data and identifying patterns that are often hidden, which can be extremely useful to firms. Firms should consider how this information is used. The regulators have encouraged firms to identify behavioural bias in consumer activities and to help consumers make better informed decisions, rather than exploiting their weaknesses.

Model Risk:

Firms should focus attention on:

- change management

- validation

- monitoring and

- reporting

This means firms should have a clear explanation of the risks of each AI application and the controls in place, and should keep that explanation up to date. Keeping it maintained is important to ensure that, as the AI systems change, the risks continue to be managed appropriately.
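As an illustration only, the idea of a maintained record of AI risks and controls can be sketched in a few lines of Python. Everything here is hypothetical: the `ControlRecord` fields, the `overdue_reviews` helper, the sample entries and the 90-day review interval are assumptions made for the example, not requirements from the regulators or the AIPPF report.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ControlRecord:
    """One line of a hypothetical AI risk and control register."""
    risk: str            # plain-language description of the AI application risk
    control: str         # the control that mitigates the risk
    owner: str           # person accountable for the control
    last_reviewed: date  # when the control was last checked


def overdue_reviews(records, today, max_age_days=90):
    """Return records whose last review is older than max_age_days (assumed interval)."""
    cutoff = today - timedelta(days=max_age_days)
    return [r for r in records if r.last_reviewed < cutoff]


# Illustrative entries only; a real register would be firm-specific.
register = [
    ControlRecord("Credit model drifts after retraining", "Quarterly validation",
                  "Model Risk Lead", date(2023, 1, 10)),
    ControlRecord("Chatbot gives unclear product information", "Monthly transcript sampling",
                  "Compliance Officer", date(2023, 6, 20)),
]

stale = overdue_reviews(register, today=date(2023, 7, 12))
for record in stale:
    print(f"Review overdue: {record.risk} (owner: {record.owner})")
```

The point of the sketch is that each risk has a named control, a named owner and a review date, so a stale entry is easy to spot and report.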

Governance:

It’s crucial that firms identify and understand the governance of any AI systems. AI systems have the capacity to automate decision making, which brings additional risk. Firms need to consider effective accountability for AI systems, with clearly defined responsibilities and agreed governance frameworks.

Regulatory view:

For some time now, we’ve heard UK regulators encouraging firms to embrace technology, for example, by using the regulatory sandboxes to test their new products, an approach that has been adopted across the globe.

We also see the regulators highlighting that whilst we should embrace technology, we should be mindful of the risks.

The message is that AI systems should be reviewed as part of a firm’s risk management framework. To mitigate the risks, firms first need to identify and consider the risks inherent in their AI systems.

The regulator wants to see firms monitoring their AI systems, not just from a performance aspect, but also in relation to change management. One of the challenges with AI is that its use develops at great speed, so if an AI system changes, firms need to track those changes and monitor what happens next.
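One way to picture tracking changes alongside performance is a simple change log that flags any AI system change not yet followed by a validation. The sketch below is a minimal, hypothetical example: the `ModelChange` record, the `unvalidated_changes` helper and the sample systems are assumptions for illustration, not a prescribed regulatory format.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ModelChange:
    """One entry in a hypothetical AI system change log."""
    system: str
    version: str
    changed_on: date
    validated_on: Optional[date] = None  # stays None until the change is validated


def unvalidated_changes(changes):
    """Return changes with no validation, or with a validation dated before the change."""
    return [
        c for c in changes
        if c.validated_on is None or c.validated_on < c.changed_on
    ]


# Illustrative entries only.
change_log = [
    ModelChange("fraud-screening", "1.4", date(2023, 3, 1), validated_on=date(2023, 3, 8)),
    ModelChange("fraud-screening", "2.0", date(2023, 6, 15)),
]

pending = unvalidated_changes(change_log)
for change in pending:
    print(f"{change.system} v{change.version} changed on {change.changed_on} and awaits validation")
```

A list like `pending` could feed regular reporting, so that changes to an AI system are never left unreviewed.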

What should firms consider when adopting AI?

Adopting AI systems offers huge opportunities for businesses so long as the risks are identified and managed appropriately. In brief, firms should treat AI applications like any other system. Firms need an appropriate method of:

- appraising AI systems to document the rationale for choosing one system over another including benefits and complexity

- identifying potential risk of consumer harm

- measuring consumer harm

- communicating clearly with customers about the use of AI

The above should link back to the three key areas: Data, Model Risk and Governance.

How Ruleguard can assist:

Ruleguard is an industry-leading software platform designed to help regulated firms manage the burden of evidencing and monitoring compliance. It has a range of tools to help firms fulfil their obligations across the UK, Europe and APAC regions.

With Ruleguard, firms can manage the following AI Risks:

- Map AI risks and controls to applicable regulations, policies, and procedures to facilitate gap analysis and compliance monitoring

- Assign responsibilities to senior managers

- Document and report on control failures and deficiencies, identify issues and schedule remediation tasks

- Provide auditors and regulatory authorities with selective, secure access to compliance and assurance documentation

For further information, please contact us on 020 3965 2166 or at hello@ruleguard.com.

Webinars:

Ruleguard hosts regular events on various regulatory topics.

To register your interest or learn more about our 2023 events, please click here.

White Papers:

To request a complimentary copy of our White Paper, A Guide to FCA Strategy: 2022-2025, click here.

See our blog page for further articles or contact us via hello@ruleguard.com.

Visit our website to find out more about how Ruleguard can help: https://www.ruleguard.com/platform

Head of Risk & Compliance | Ruleguard
